This week, Calipsa's CTO Boris Ploix demystifies big data: what is it, and why is it so important to modern technology?

For the last ten years, the expression "big data" has been used almost everywhere, and in one way or another most successful companies have used it in their products. Some consider it a technology revolution; others see it as the next big threat to humanity or the end of privacy. So what does it really mean and why does it matter?

Coined in the 1990s, the term "big data" refers to the ability to process, extract and analyse information from a dataset so large that traditional software could not deal with it. Even though there is no strict definition of what does or does not qualify as big data, it is usually associated with three main factors: volume, variety and velocity.

When we talk about volume, we are not really talking about the size of each individual file, but rather the total amount of data that needs to be processed. There is no strict volume threshold that determines exactly what is or is not “big” data; as storage and processing capacity increase over time, any such threshold would keep rising anyway.

What we mean by variety is that the data in question could be in any format. We can identify three kinds of data: structured, semi-structured and unstructured.

| Structured | Semi-structured | Unstructured |
| --- | --- | --- |
| Data that is well structured and well organised. The content of the data can vary but its format is very predictable: it fits into a table that can be searched easily. | Data that is well structured but unorganised. The basic structure of this data is expected to be the same but, due to its unorganised nature, the content will be harder to search. | Data that is not structured and not organised. Searching is not straightforward at all and requires advanced tools to extract information. |
| e.g. rows in a table | e.g. CSV files | e.g. image and video files |
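As a rough illustration of the three categories, the sketch below searches the same made-up alarm records in each form. The table name `alarms`, its columns and the sample values are assumptions invented for this example, not Calipsa's actual schema.

```python
import csv
import io
import sqlite3

# Structured: rows in a table with a fixed schema, searchable with one query.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE alarms (id INTEGER, camera TEXT, hour INTEGER)")
conn.executemany(
    "INSERT INTO alarms VALUES (?, ?, ?)",
    [(1, "gate", 2), (2, "yard", 14), (3, "gate", 23)],
)
gate_ids_sql = [
    row[0]
    for row in conn.execute(
        "SELECT id FROM alarms WHERE camera = ? ORDER BY id", ("gate",)
    )
]

# Semi-structured: a CSV file has a predictable shape but no index,
# so searching means parsing and scanning every line.
csv_text = "id,camera,hour\n1,gate,2\n2,yard,14\n3,gate,23\n"
gate_ids_csv = [
    int(row["id"])
    for row in csv.DictReader(io.StringIO(csv_text))
    if row["camera"] == "gate"
]

# Unstructured: raw image bytes carry no fields to filter on; answering
# "is there a person?" needs a model, not a query.
image_bytes = bytes([0, 255, 17, 42])  # made-up pixel values

print(gate_ids_sql, gate_ids_csv, len(image_bytes))
```

Both searches return the same alarm ids, but only the table answers with a single query; the image bytes offer nothing to query at all.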

Finally, velocity refers to the amount of data we are processing per unit of time. Computers are able to ingest and process thousands of data points per second. Those operations can be done completely independently and in parallel, without using a queuing system that would impose any kind of delay.
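A minimal sketch of measuring velocity as data points processed per second is shown below. The `process` function is a hypothetical stand-in for real per-item work, and the thread pool is only a convenient way to show items being handled independently; in CPython, CPU-bound work would need processes rather than threads to run truly in parallel.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def process(point: int) -> int:
    """Hypothetical per-item work; each item is independent of the others."""
    return point * 2


def run(points, workers: int = 4):
    """Process all points with a pool of workers and report the throughput."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(process, points))  # keeps the input order
    elapsed = time.perf_counter() - start
    return results, len(results) / elapsed


results, per_second = run(range(10_000))
print(f"{per_second:,.0f} data points per second")
```

The reported figure depends entirely on the machine and on how heavy the real per-item work is, so it is best read as a way of measuring velocity rather than as a benchmark.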

Why has big data become a buzzword in recent years?

Advances in hardware technologies were one of the biggest driving factors that enabled large-scale processing to take place. Let’s take a look at some of these factors in more detail.

Storage

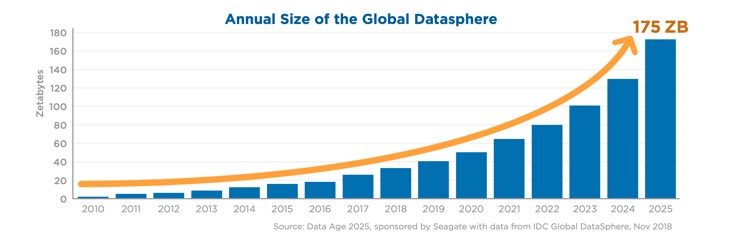

It has never been cheaper to store data and it is becoming more and more inexpensive. If we look at the global data storage capacity, it has been growing exponentially for the last thirty years. According to IDC's white paper, The Digitization of the World – From Edge to Core (Nov 2018), data storage is set to grow exponentially as our reliance on data increases. By 2025, global data storage is set to reach 175 zettabytes:

Processing power

Moore's law famously observed that the number of transistors on a chip doubles roughly every two years, a trend often quoted as processing power doubling every 18 months, and it held broadly true for several decades. The exponential growth in the speed at which data can be processed has allowed more complex operations on a much larger scale.
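To get a feel for what a fixed doubling period implies, the sketch below computes the idealised growth factor over 30 years for an 18-month and a two-year doubling period. The function name `growth_factor` is an assumption made for this example, and real hardware has not followed such a clean curve.

```python
def growth_factor(years: float, doubling_period_years: float) -> float:
    """Idealised growth: the quantity doubles once per doubling period."""
    return 2 ** (years / doubling_period_years)


# 30 years at one doubling every 18 months is 20 doublings.
print(f"{growth_factor(30, 1.5):,.0f}")  # prints 1,048,576

# 30 years at one doubling every two years is 15 doublings.
print(f"{growth_factor(30, 2):,.0f}")  # prints 32,768
```

Either way, a modest-sounding doubling period compounds into a difference of several orders of magnitude over three decades.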

Cost of hardware

The last element is the cost of hardware: it has never been cheaper to both store and process data. Doing so at scale used to require large servers in ventilated rooms, but it can now be done entirely in the cloud, with no hardware for the owner to manage.

Why is big data so important nowadays?

Having the ability to process and analyse a large quantity of continuous data is what made artificial intelligence, specifically machine learning, successful beyond the academic world. At its core, machine learning is a set of techniques that makes it possible to learn some predictable behaviour or make some smart classifications based on historical data.

Machine learning techniques have enabled us to automate certain processes in ways that simply wouldn’t have been possible just a few years ago. Let’s take an example in order to illustrate this. Calipsa’s mission is to help detect and prevent crime around the world. To do this, we connect to CCTV cameras and use machine learning to understand what is going on, and recognise elements inside a scene.

If the goal is to detect a person inside an image, instead of creating some smart rules to describe what a person should look like, we feed our machine learning models a lot of images with different people in them in order to build a generalised representation of what a person looks like. It is easy to imagine that this model will be able to extract more features and information if it has seen one million people, rather than just one or two.

Machine learning models are statistical in nature: they try to extract trends and correlations from a large dataset, so the more data you have, the better the predictions will be. That is why the more alarms we are able to process and store, the more accurate our system becomes over time.
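The statistical intuition can be sketched with a toy experiment: estimating an unknown rate from random samples of increasing size. This is not Calipsa's model; the true rate of 0.3, the sample sizes and the function name `mean_abs_error` are all assumptions chosen for illustration.

```python
import random


def mean_abs_error(sample_size: int, true_rate: float = 0.3,
                   trials: int = 200, seed: int = 0) -> float:
    """Average error when estimating true_rate from sample_size observations."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    total = 0.0
    for _ in range(trials):
        # Each observation is a "hit" with probability true_rate.
        hits = sum(rng.random() < true_rate for _ in range(sample_size))
        total += abs(hits / sample_size - true_rate)
    return total / trials


errors = {n: mean_abs_error(n) for n in (10, 100, 1000)}
for n, err in errors.items():
    print(f"{n:>5} samples: typical error {err:.3f}")
```

The typical error shrinks as the sample grows, roughly in proportion to one over the square root of the sample size, which is the same effect that makes a model trained on more examples more reliable.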

Our ability to use big data and process millions of alarms every day really enables Calipsa to utilise the full potential of machine learning to provide a lot of value to businesses around the world.

What about data privacy?

Sometimes, big data is wrongly associated with Big Brother. However, this doesn’t need to be the case, and it’s important that businesses respect people’s privacy and use data responsibly. At Calipsa, we make sure that all stored data is completely anonymised to be compliant with GDPR, but also to ensure the total privacy of our customers.

It is interesting to note that these restrictions do not put any constraints on the accuracy of our machine learning model. The statistical model does not need personalised data or any kind of context to be able to make predictions; looking at an aggregation of data is more than enough to reach optimal accuracy.

What does this mean for the future?

In the Information Age, more data means better quality, so the service provided by Calipsa will only improve with scale. The more people use the platform, the better the accuracy will be, thanks to our ability to process and analyse big data.

Want to learn even more about all things technology? Download our latest ebook, Machine learning explained: the 3 essentials for video analytics.

Editor’s Note: This post was originally published in October 2020 and has been updated for accuracy and comprehensiveness.
